Fabi.ai January Product Updates

Making AI data analysis work for everyone

Fabi is the only AI data analysis platform that is truly accessible to the entire team. If we had to sum up January in one theme, it would be this: continuing to build a self-service analytics experience for everyone, with improvements ranging from small UI features all the way down to the data.

Application connector enhancements

Our Fabi 2.0 launch was a big step forward. We introduced hundreds of application connectors, making us the only AI data analysis platform that can analyze your data wherever it lives. No migration, no copying data around.

Since launch, we've made two improvements based on what we're seeing from customers:

Automated data modeling for additional sources. For certain connectors, such as QuickBooks and Stripe, we've added dbt models that automatically transform your data to make it as AI-ready as possible. In simpler terms: Analyst Agent understands your data better right out of the gate, which means more accurate insights with zero manual setup on your end.

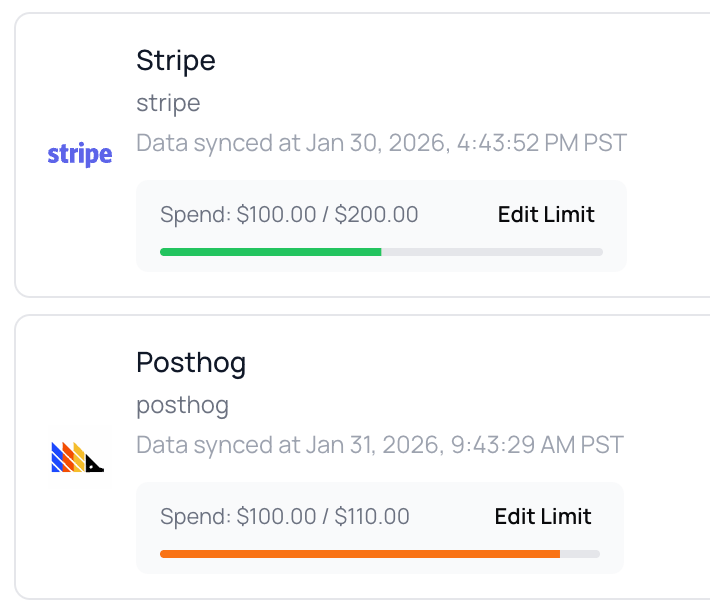

More transparency on usage and billing. On your data connector settings page, you can see exactly how many records have synced from each source in the current month. You can also adjust spending limits so you don't exceed your budget if there's a sudden, unexpected surge in record updates.

Tip: If you're using an application connector in Fabi and think you could be getting more from Analyst Agent, reach out. We're adding new models all the time, and we're happy to offer complimentary data engineering services to our Teams and Enterprise customers.

An AI that uses its brain…

...or whatever it is under the hood.

Rather than always trying to answer every prompt immediately, Analyst Agent will sometimes ask clarifying questions when a request seems ambiguous. This helps you think through the specifics of what you need, and it makes the response you get much more relevant to what you're actually trying to accomplish.

Quality of life improvements

You may have already noticed a few new features we've rolled out to keep providing the most powerful and safest analysis environment:

Table of contents in Smartbooks: Easily navigate your analysis.

Code variable explorer: For the more technical crew, you can see all the variables in a given Smartbook and quickly jump to the code block that generated each one.

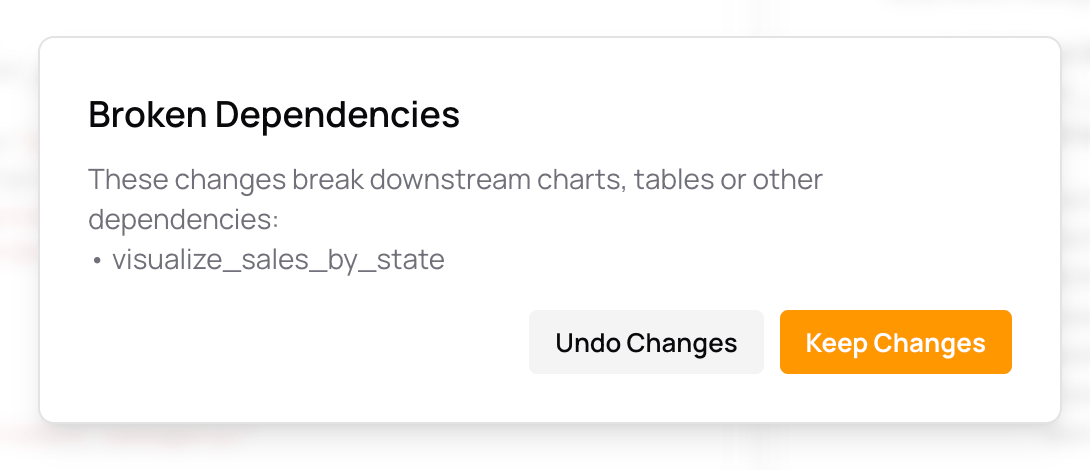

Broken dependency warnings: If you delete a block or cell that is used somewhere else, Fabi will warn you so you don't accidentally break your analysis. These are the kinds of guardrails we believe make analytics truly self-service.
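For the more technical crew, here's a rough idea of how a broken-dependency check can work in general. This is a minimal sketch, not Fabi's implementation: it assumes each cell is plain Python, uses the standard library's `ast` module to find which names each cell assigns and reads, and flags cells that read a name only the deleted cell defines. The helpers `names_in_cell` and `broken_dependencies` are hypothetical, made up for illustration.

```python
import ast


def names_in_cell(source: str) -> tuple[set[str], set[str]]:
    """Return (defined, used) variable names for one cell's code.

    Hypothetical helper; a simplification that only tracks plain names
    (imports, def and class statements are not counted as definitions).
    """
    defined, used = set(), set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Store):
                defined.add(node.id)
            elif isinstance(node.ctx, ast.Load):
                used.add(node.id)
    return defined, used


def broken_dependencies(cells: dict[str, str], to_delete: str) -> dict[str, set[str]]:
    """Map each remaining cell to the names it would lose if `to_delete` were removed.

    Hypothetical helper; it ignores cell execution order.
    """
    defined_by_deleted, _ = names_in_cell(cells[to_delete])

    # Names that some other cell also defines are not considered broken.
    still_defined: set[str] = set()
    for cell_id, src in cells.items():
        if cell_id != to_delete:
            still_defined |= names_in_cell(src)[0]
    at_risk = defined_by_deleted - still_defined

    # Any remaining cell that reads an at-risk name gets a warning.
    warnings: dict[str, set[str]] = {}
    for cell_id, src in cells.items():
        if cell_id == to_delete:
            continue
        _, used = names_in_cell(src)
        lost = used & at_risk
        if lost:
            warnings[cell_id] = lost
    return warnings


if __name__ == "__main__":
    cells = {
        "load": "df = load_orders()",
        "clean": "clean_df = df.dropna()",
        "chart": "plot(clean_df)",
    }
    print(broken_dependencies(cells, "load"))  # {'clean': {'df'}}
```

Deleting the `load` cell flags `clean`, because nothing else defines `df`. A real notebook tool would also need to handle imports, function definitions, and execution order, which this sketch deliberately skips.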

Other interesting reads and content

Why legacy BI isn't working for AI

Marc wrote a guest post for Atlan's Metadata Weekly exploring why so many teams are struggling to get AI working with their existing BI stack—and what the teams figuring it out are doing differently.

Here are a few of the core reasons:

Legacy BI could tolerate poorly modeled data. You could patch things up at the BI layer, which only compounds the problem over time. Good data modeling is the #1 driver of AI accuracy, and traditional tools weren't designed to enforce it.

Traditional solutions are built around a rigid semantic layer paradigm. Drag-and-drop functionality needed these strict layers to work with SQL compilers, but that's no longer the case: AI actually works best with role-dependent, plain-English context. Smaller organizations are finding they're more comfortable keeping context in .md files rather than in complex proprietary schemas.

Many teams try to nail self-service analytics for everyone in the organization at once rather than taking incremental steps. Successful organizations typically start by augmenting data-literate teams and cut their teeth on those use cases before trying to deploy an AI analyst that can answer every question for the business.

Small and medium-sized organizations are starting to figure out what large enterprises are still struggling with. This is natural: the shift to AI-first requires a shift in strategy and thinking, and it's dramatically easier to make when there isn't a deep-rooted foundation to work around.

Looking at what these organizations are doing should give data leaders some good clues about what's to come.

Read the full post: The AI Analyst Hype Cycle

Building an MCP server?

We were building our MCP server at the same time as MotherDuck was building theirs, and we swapped some notes. They did a nice job touching on some of the more tedious technical details, with a particular focus on authentication: motherduck.com/blog/dev-diary-building-mcp

This is a must-read for data and engineering teams dabbling in their own MCP server build.

Tip: Interested in an AI data analyst MCP server but don't want to build one from scratch? We've got you. Check out our Analyst Agent MCP server.